A Gentle (Theoretical) Introduction to Kafka

All you need to know to start working with Kafka.

Table of Contents:

- What is Kafka?

- Why use it?

- Kafka as a message broker

- Minimal Kafka Vocabulary

- Events

- Topics

- Producers

- Consumers

- Brokers

- Some innovations of Kafka vs Other Message Brokers

1. WHAT IS KAFKA?

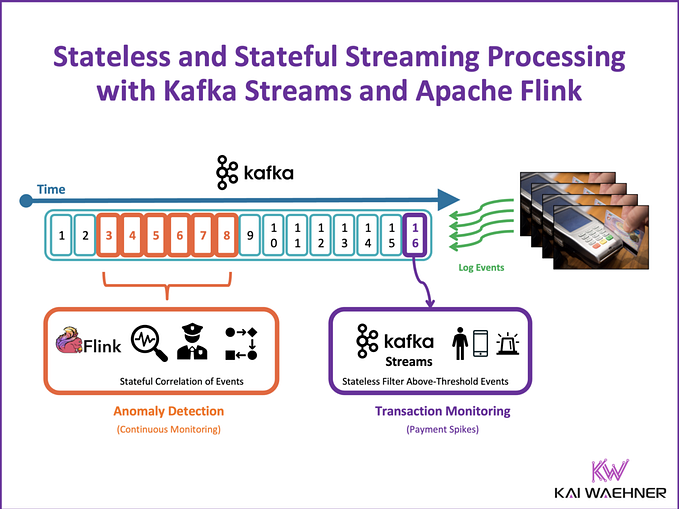

Kafka is a distributed event streaming platform. It is distributed because it can run across multiple machines. It is called event streaming because it processes events (i.e. messages), each describing something that happened and sent as a key/value pair. The messages are processed as a stream, which means that there is no schedule: they are handled as they arrive.

2. WHY USE IT?

Kafka was initially conceived as a message queue (it was developed at LinkedIn and open-sourced in 2011).

A message queue is a service that allows you to buffer your flow of data. An application sends messages in, the messages get buffered, and another service reads those messages. This means you do not have to process data the moment it arrives: you can store it temporarily and process it later.
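The buffering idea can be sketched with Python's standard-library `queue.Queue`. This is only an in-memory illustration, not Kafka itself, and the function names `send_order` and `process_pending` are made up for the example.

```python
import queue

# In-memory stand-in for a message queue (not Kafka itself).
buffer = queue.Queue()

def send_order(order_id: int) -> None:
    """Sending side: drop the message in the buffer and move on."""
    buffer.put({"order_id": order_id})

def process_pending() -> list:
    """Receiving side: drain whatever has accumulated, at its own pace."""
    processed = []
    while not buffer.empty():
        processed.append(buffer.get())
    return processed

# Three orders arrive in a burst; nothing is processed yet.
for i in range(3):
    send_order(i)

# Later, the reader catches up on everything that was buffered.
orders = process_pending()
print(orders)
```

The sender never waits for the reader, which is the decoupling that a message queue provides.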

Today, with Kafka you can:

- ingest data in real time

- manipulate it

- store and retrieve it

- route the event streams to different destinations

Let’s look at an example.

You build an app to rent books. When a user places an order, your system saves a new row in a database and sends a confirmation email. It looks something like this:

Well, good news, app owner! The book business is booming. You decide to add a new product: a Movie app. Now, if a user rents a movie, you want to keep track of it in a different database. Moreover, you also add a Machine Learning model to create personalised recommendations. Proud of your work, you look at your system design and find this:

Quite messy, huh?

The truth is that when you increase the number of services, the connections become more and more complex. Kafka was created to solve this problem.

Good news though... You are not alone. Look at LinkedIn’s data architecture in 2013 😅

How can Kafka help you? It can bring some order. Something like this:

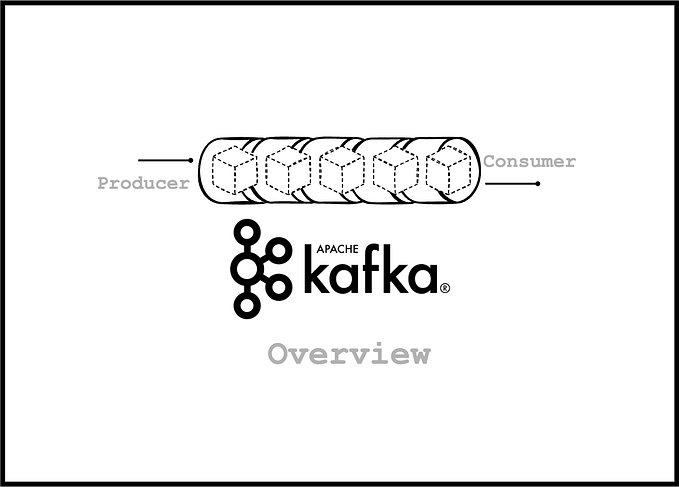

3. KAFKA AS A MESSAGE BROKER

One of the ways Kafka helps with multiple connections is by acting as a “message broker”.

What is a message broker? A message broker is a service that simplifies the communication of data between producers (the parts of your system that generate data) and consumers (the parts of your system that instead ingest/receive data).

This offers the advantage of providing temporary storage. Every business has its peak hours. If you were to send data directly to the database, the system might not be able to handle the high volume. Are you going to let your customers walk away frustrated when your queue takes too long to process? Probably not. A better idea would be to decouple the ingest/processing part from the storage one.

4. MINIMAL KAFKA VOCABULARY

- Events/Messages: records of something that occurred.

- Topics: a collection of messages of the same type. The producers send messages to the topics, the consumers subscribe to the topics and receive the messages.

- Producers: client applications that create events and publish them to a Kafka topic.

- Brokers: the Kafka servers that handle the data.

- Consumers: a client application that subscribes to a topic and reads the data stored in the broker.

5. EVENTS in Kafka

Events (i.e. messages) are the basic unit of Kafka streaming. They can be anything that you want to stream from one service to another.

They are stored as byte arrays and are made of a key/value pair and a timestamp.

The key is optional: it is not unique and it can be sent as null. The timestamp can also be sent as null. If that’s the case, Kafka will automatically add one.
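As a rough mental model, an event can be pictured as a small record like the one below. The `Event` class and its field names are hypothetical and are not the record class of any Kafka client; the sketch only mirrors the rules described above (optional key, timestamp filled in when missing).

```python
import time
from dataclasses import dataclass
from typing import Optional

@dataclass
class Event:
    """Illustrative model of an event; not a real Kafka client class."""
    value: bytes                        # payload, stored as bytes
    key: Optional[bytes] = None         # optional and not unique
    timestamp_ms: Optional[int] = None  # filled in below when missing

    def __post_init__(self):
        # Mimic the behaviour described above: add a timestamp if none was sent.
        if self.timestamp_ms is None:
            self.timestamp_ms = int(time.time() * 1000)

order = Event(key=b"user-42", value=b'{"book": "Dune"}')
anonymous = Event(value=b"ping")        # no key, no timestamp supplied
print(order.key, anonymous.key, anonymous.timestamp_ms is not None)
```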

Even though the key is not mandatory, if you care about the order in which messages are consumed, it is a good idea to give related events the same key so that they are sent to the same partition.

There is a maximum size for the messages that you can send (about 1 MB by default, and configurable).

Finally, you can choose between different delivery guarantees: exactly once, at least once (i.e. the message can be delivered more than once), or at most once (i.e. the message can be dropped).

6. TOPICS in Kafka

As we said, topics can be thought of as containers of messages of the same type. You can create as many topics as you want (e.g. one topic for book orders, another one for movie orders, etc.). Topics “live” on brokers.

A topic is then composed of one or more partitions. Those partitions are handled by the brokers and are typically replicated across the cluster.

The producer writes messages to a topic in append mode while the consumer reads the messages specifying an offset and moving forward (more about it later).

A partition holds an ordered sequence of messages.

Why are topics divided into partitions? For scalability reasons: by dividing topics into partitions, we can store each of them on a different node.
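The relationship between topics, partitions, appending, and offsets can be sketched in a few lines. The `Topic` and `Partition` classes below are hypothetical, in-memory stand-ins that ignore replication and disk storage.

```python
class Partition:
    """Append-only log: messages are only ever added at the end."""
    def __init__(self):
        self.log = []

    def append(self, message: str) -> int:
        self.log.append(message)
        return len(self.log) - 1          # offset of the new message

    def read_from(self, offset: int) -> list:
        return self.log[offset:]          # read forward from an offset

class Topic:
    """A named collection of partitions."""
    def __init__(self, name: str, num_partitions: int):
        self.name = name
        self.partitions = [Partition() for _ in range(num_partitions)]

book_orders = Topic("book-orders", num_partitions=2)
book_orders.partitions[0].append("order-1")
book_orders.partitions[0].append("order-2")
last_offset = book_orders.partitions[0].append("order-3")

print(last_offset)                               # 2
print(book_orders.partitions[0].read_from(1))    # ['order-2', 'order-3']
```

Because each partition is an independent list, nothing stops each one from living on a different machine, which is where the scalability comes from.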

7. PRODUCERS in Kafka

A producer is the service that produces (i.e. sends) messages to Kafka. We saw that the producer publishes the messages to a topic. In which partition will the message fall?

The partition is determined with this formula:

message_partition = hash(message_key) % partition_number

Kafka applies a hash function to the key of the message and then takes the result modulo the number of partitions. If a message doesn’t have a key, Kafka spreads the messages across the partitions so that they stay balanced.
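The formula above can be tried out with a small sketch. Two assumptions are made for the sake of a runnable example: `zlib.crc32` stands in for the hash function (it is deterministic across runs, but it is not the hash Kafka actually uses), and keyless messages are distributed with a plain round-robin counter. The function name `choose_partition` is made up.

```python
import itertools
import zlib
from typing import Optional

_round_robin = itertools.count()   # counter used for messages without a key

def choose_partition(key: Optional[bytes], num_partitions: int) -> int:
    if key is None:
        # Simplification: plain round-robin to keep partitions balanced.
        return next(_round_robin) % num_partitions
    # Stand-in hash (assumption): same key always gives the same partition.
    return zlib.crc32(key) % num_partitions

p1 = choose_partition(b"user-42", 3)
p2 = choose_partition(b"user-42", 3)
keyless = [choose_partition(None, 3) for _ in range(6)]

print(p1 == p2)    # True: same key, same partition
print(keyless)     # [0, 1, 2, 0, 1, 2]
```

Note that the mapping depends on the number of partitions, so changing that number later changes where a given key lands.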

ACKS: How you manage connectivity issues/faulty brokers

To prevent things from going wrong without you noticing, Kafka allows you to set an acknowledgment strategy.

The 3 options that you have are:

- acks=0: the producer sends the message to the broker and doesn’t wait for a response. This is a fast setup but if something goes wrong (e.g. the cluster dies and it can’t process the message), you might lose the data and get no notification.

- acks=1: the leader broker sends a confirmation once it has received the message. This is safer but a bit slower.

- acks=all: you get a confirmation only once the broker has received the message and it has also been replicated to the other brokers. The producer waits until all the followers are in sync with the leader. Of course, this makes sense only if you have a replication factor greater than 1 (e.g. in the example there is a replication factor of 2).
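The three options can be compared with a toy model of a single send. The function `send` and its parameters are hypothetical, and the model is deliberately simplified: here a follower that has not replicated the message makes an `acks=all` send fail, whereas a real cluster has more nuanced rules about which replicas count.

```python
def send(acks: str, leader_ok: bool, followers_ok: list) -> str:
    """Return what the producer learns about one send in this toy model."""
    if acks == "0":
        return "unknown"          # fire and forget: no response is awaited
    if not leader_ok:
        return "error"            # the leader never confirmed the write
    if acks == "1":
        return "acknowledged"     # leader has it; followers are not checked
    if acks == "all":
        # Simplification: every follower must have replicated the message.
        return "acknowledged" if all(followers_ok) else "error"
    raise ValueError(f"unsupported acks value: {acks}")

print(send("0", leader_ok=False, followers_ok=[]))          # unknown
print(send("1", leader_ok=True, followers_ok=[False]))      # acknowledged
print(send("all", leader_ok=True, followers_ok=[False]))    # error
print(send("all", leader_ok=True, followers_ok=[True]))     # acknowledged
```

The second and third calls show the trade-off: with `acks=1` the producer is told everything is fine even though the only copy lives on the leader.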

Good to know: if you get a TimeoutException, it typically means that the broker is busy, while if you get a SerializationException, it means that the message was sent in an unexpected format.

8. CONSUMERS in Kafka

Consumers are the services that consume (i.e. receive) the messages.

A consumer can subscribe to one or more topics.

There is a one-to-many relationship between consumers and partitions:

A consumer can read one or more partitions, but a partition cannot be split among different consumers of the same group (more on groups below).

This means that if you allocate more consumers than the number of partitions, some consumers will remain idle.

Imagine you start with this situation:

You then add consumers until there are more consumers than partitions:

This is a bad setup as one consumer will remain idle.
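The idle-consumer effect is easy to reproduce with a simplified assignment function. The round-robin strategy and the name `assign` are assumptions made for illustration; Kafka's own assignment strategies are more elaborate, but the one-owner-per-partition rule is the same.

```python
def assign(partitions: list, consumers: list) -> dict:
    """Give each partition to exactly one consumer, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for i, partition in enumerate(partitions):
        owner = consumers[i % len(consumers)]
        assignment[owner].append(partition)
    return assignment

partitions = ["p0", "p1", "p2"]

print(assign(partitions, ["c1", "c2"]))
# {'c1': ['p0', 'p2'], 'c2': ['p1']}

print(assign(partitions, ["c1", "c2", "c3", "c4"]))
# {'c1': ['p0'], 'c2': ['p1'], 'c3': ['p2'], 'c4': []}
```

In the second call `c4` receives an empty list: with three partitions, a fourth consumer has nothing to read.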

Finally, consumers are often bundled into a “group”. You can think of a group as an application, with the different consumers being multiple instances of the same application.

Multiple groups can consume the same topic.

To go back to the first business example, the topic could contain messages of book orders and the two applications subscribing to it could be the email notification and the recommendation model.

The Offset: how Kafka keeps track of which messages need to be read

For each consumer group and each partition of a topic, Kafka saves an “offset”.

Imagine you are reading a book. After a few pages, you are tired and put a bookmark. In this way, once you come back, you will know exactly where to start from. You could also have another person picking up your reading from where you left off.

The offset works exactly in this way. It allows a consumer to resume processing messages from where it left off (imagine the consumer was shut down), and it allows a new consumer to pick up from the committed offset of the partition.

Offsets are particularly important if you think that you might want to add/remove consumers depending on the hours of the day (e.g. during peak hours). Moreover, Kafka has a pull architecture which means that messages are stored until they are “pulled” by the consumer.

This pull feature and the offset are extremely convenient as they allow you to add/remove resources (especially if you have a cloud architecture) without the risk of losing messages while doing this.
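The bookmark analogy translates directly into code. The sketch below keeps one offset per group for a single partition; the `poll` function, the `committed` dictionary, and the group names are hypothetical, and committing immediately on every poll is a simplification.

```python
log = ["m0", "m1", "m2", "m3", "m4"]   # one partition of a topic
committed = {}                          # group name -> next offset to read

def poll(group: str, max_messages: int) -> list:
    """Pull up to max_messages for a group and commit the new offset."""
    start = committed.get(group, 0)
    batch = log[start:start + max_messages]
    committed[group] = start + len(batch)
    return batch

first = poll("email-service", 2)        # reads m0, m1
# ... the consumer is shut down and later restarted, or replaced ...
second = poll("email-service", 2)       # resumes at m2
other = poll("recommender", 3)          # another group has its own bookmark

print(first, second, other)
```

Messages stay in `log` after being read, so a consumer that joins or restarts later loses nothing: it only needs to know its offset.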

Finally, the offset is automatically committed every x seconds. You can change this by setting a shorter or longer interval, or you can decide to commit the offset only after the consumer has processed the messages. This matters when you want to avoid duplicated reads. For example, imagine that the offset gets committed every 5 seconds and your consumer crashes 2.5 seconds after the last commit. The messages consumed during those 2.5 seconds will be re-processed after the restart. You might want to avoid this.
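The crash scenario can be simulated in a few lines. To keep the example deterministic, the sketch commits every few messages instead of every few seconds; that substitution, along with the function name `run_consumer` and its parameters, is an assumption made for illustration.

```python
log = [f"m{i}" for i in range(10)]

def run_consumer(start_offset: int, commit_every: int, crash_after: int):
    """Process messages, committing every `commit_every` messages.

    Returns (processed messages, last committed offset) when the run stops.
    """
    processed, committed = [], start_offset
    for offset in range(start_offset, len(log)):
        if len(processed) == crash_after:
            break                            # simulated crash
        processed.append(log[offset])
        if len(processed) % commit_every == 0:
            committed = offset + 1           # periodic commit
    return processed, committed

# First run: commits after 4 messages, crashes after 6.
processed, committed = run_consumer(0, commit_every=4, crash_after=6)
# Restart from the last committed offset.
after_restart, _ = run_consumer(committed, commit_every=4, crash_after=6)
duplicates = sorted(set(processed) & set(after_restart))

print(committed)     # 4
print(duplicates)    # ['m4', 'm5']
```

The two messages handled after the last commit are read again after the restart, which is the duplication described above.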

9. BROKER NODES in Kafka

Kafka is set up as a cluster of nodes. You can think of it as a bunch of computers piled up in a rack and networked together.

The minimal set-up of Kafka would include:

- a computer that serves as a broker node, which is responsible for receiving the messages, storing them on disk, and serving them to the consumers.

- a computer that serves as a ZooKeeper node (required up until version 2.7.0), which is responsible for keeping the system synchronised.

You can imagine them like this:

Or if you prefer some level of abstraction:

You could also have additional components such as a Schema Registry or Kafka Connect.

10. SOME INNOVATIONS OF KAFKA vs OTHER MESSAGE BROKERS

- Kafka is set up as a cluster of nodes. This means that it can be scaled easily (you add/subtract nodes following your business growth).

- Kafka has a pull architecture. Consumers pull the data only when they need it. This is possible because the messages are persisted on disk: you set a retention period or a storage limit.

- Data can be re-processed. You can do this by resetting the offset (more about it later).
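Retention can be pictured as a periodic clean-up of the oldest messages. In the sketch below the function `apply_retention` and its parameters are hypothetical, and the storage limit is expressed as a number of messages rather than a size on disk, purely to keep the example short.

```python
def apply_retention(log, now_ms, max_age_ms=None, max_messages=None):
    """Drop the oldest messages that exceed an age limit or a count limit."""
    kept = list(log)
    if max_age_ms is not None:
        # Keep only messages that are still young enough.
        kept = [(ts, msg) for ts, msg in kept if now_ms - ts <= max_age_ms]
    if max_messages is not None:
        # Keep only the newest max_messages entries.
        kept = kept[max(0, len(kept) - max_messages):]
    return kept

# Each entry is (timestamp in milliseconds, message).
log = [(1000, "a"), (2000, "b"), (3000, "c"), (4000, "d")]

by_age = apply_retention(log, now_ms=5000, max_age_ms=2500)
by_count = apply_retention(log, now_ms=5000, max_messages=3)

print(by_age)      # [(3000, 'c'), (4000, 'd')]
print(by_count)    # [(2000, 'b'), (3000, 'c'), (4000, 'd')]
```

Whether a message has been read plays no role here: it is removed only once it falls outside the retention limits.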

The fact that Kafka has a pull architecture is particularly handy when you have to do updates on the consumer’s side. In those situations, you can stop the consumer, do the updates, and then put it back online. It will start reading the messages from where it left off.

Re-processing of data might instead be useful if you find that your consumer has a bug or if you introduce a new feature (e.g. you want to re-read the data of the past week).
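Re-processing boils down to moving the bookmark backwards. The sketch below is an in-memory illustration with made-up names (`consume_from`, `fixed_handler`); in a real deployment the offset reset is done through Kafka's own tooling or client API rather than by assigning a variable.

```python
log = ["order-1", "order-2", "order-3", "order-4"]
committed_offset = len(log)        # the consumer has already read everything

def consume_from(offset: int, handler) -> list:
    """Run handler over every message from offset to the end of the log."""
    return [handler(message) for message in log[offset:]]

def fixed_handler(message: str) -> str:
    """Stand-in for the consumer logic after the bug fix."""
    return message.upper()

# At the current offset there is nothing new to read.
nothing_new = consume_from(committed_offset, fixed_handler)

# Reset the offset to replay the retained messages with the fixed code.
committed_offset = 0
replayed = consume_from(committed_offset, fixed_handler)

print(nothing_new)   # []
print(replayed)      # ['ORDER-1', 'ORDER-2', 'ORDER-3', 'ORDER-4']
```

This only works for messages that are still within the retention limits, since older ones are no longer on disk.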
