Effective Software Testing: Test Types and Usages

It’s pretty common to hear about the testing pyramid or that we should be focusing on unit tests and not end to end tests. But what are the types of tests out there? How do we actually ensure robust coverage? What are the different types of tests good for?

Types of Tests

When we started building new microservices at Box, we decided it was a good opportunity to re-evaluate our testing strategy. We wanted to make sure that at least our new services had a robust test strategy. A lot of what we did largely aligns with the Martin Fowler/Thoughtworks approach to testing microservices. To summarize, the idea is to have several different types of tests (unit tests, integration tests, component tests, contract tests and end to end tests), each used in a different way. All of these terms are used to mean a lot of different things by different teams, so this is how we defined them (which, again, largely matches Thoughtworks):

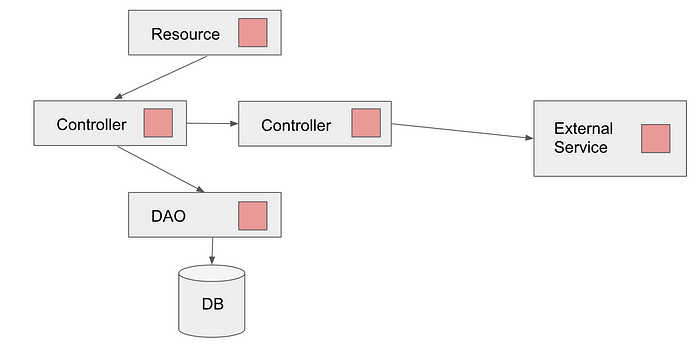

- Unit Test: Tests only a single small unit. In general, everything is mocked out, including any external dependencies (databases, authentication servers, etc.). For the most part, these tests cover the code in a single class, and therefore we would have one test class for each code class. We aimed for at least 80% code coverage with unit tests alone.

- Integration Tests: Integration tests go by many different names and have the widest range of definitions. I tend to take a somewhat narrow view of these, where they are only slightly more encompassing than unit tests. By my definition, a single test will only test one integration at a time and any other integrations are mocked out.

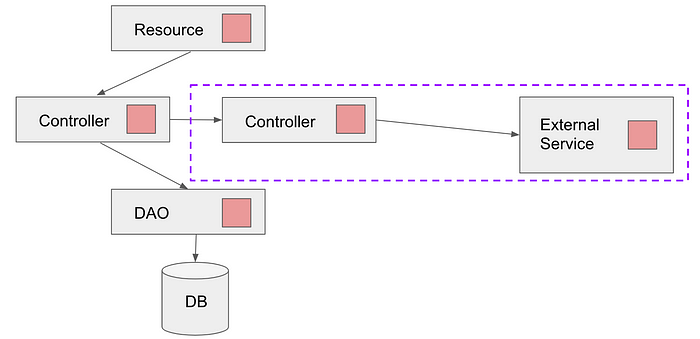

- Contract Tests: These are a natural extension of integration tests. They also test an integration point, but instead of making a call through the integration, a contract is created that is shared between both halves and then that contract is tested by each system. Typically, these are less rigorous than integration tests since they don’t directly test the integration. However, they do allow completely separate services to be able to test their part of an integration without a direct dependency on the other service for the test. To test that integration, I don’t have to actually run the other service or run that service’s tests to see if the integration is working. The only thing the services have to share is the contract definition, which shouldn’t change very often.

- Component Tests: The idea of these is to test larger sections of a system while minimizing outside variables and usually eliminating the slowest parts of the system. So they might do something like replace the entire DB with something in memory, but otherwise be almost end to end tests. In a microservices architecture, a component could include multiple closely tied services.

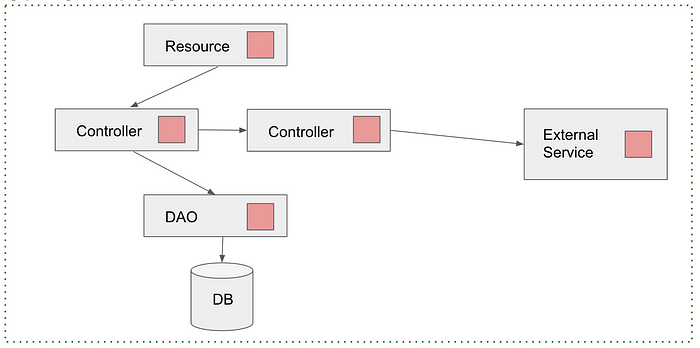

- End to End Tests: These test the full system. Typically they will either be UI end to end tests (using something like Selenium that will simulate button clicks on a UI), or API level tests, hitting the public APIs. In the case of our microservice, we used this to mean any test that hit any of our exposed endpoints, went all the way through the system (including calls to other services) and returned. Some of these were endpoints that were only accessible internally by other services, but since they were the outer edge of our system, we considered them end to end tests.
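
As a rough illustration of the unit test definition above, here is a minimal Python sketch in which the only external dependency is mocked out. `GreetingService` and its `user_store` are hypothetical names invented for this example, not code from our services.

```python
from unittest.mock import Mock


class GreetingService:
    """Hypothetical class under test; it depends on an external user store."""

    def __init__(self, user_store):
        self.user_store = user_store

    def greet(self, user_id):
        user = self.user_store.get_user(user_id)
        if user is None:
            return "Hello, guest!"
        return f"Hello, {user['name']}!"


def test_greet_known_user():
    # The store is a mock, so no database or network is involved.
    store = Mock()
    store.get_user.return_value = {"name": "Ada"}
    service = GreetingService(store)
    assert service.greet(42) == "Hello, Ada!"
    store.get_user.assert_called_once_with(42)


def test_greet_unknown_user():
    store = Mock()
    store.get_user.return_value = None
    assert GreetingService(store).greet(7) == "Hello, guest!"
```

An integration test, by the narrow definition above, would swap the mock for the one real dependency being exercised and keep everything else mocked.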

Applying These Tests to a Monolith

While all of these test definitions and descriptions were assembled with microservices in mind, they largely still apply to a monolith. In a monolith, unit tests and end to end tests will translate directly. With unit tests, you’re still testing single classes and with end to end tests, you should still be hitting the public interfaces, running through the entire system and checking the results. Where things start to get a bit more fuzzy is with the other tests.

The main advantage of contract tests is that they allow you to define and enforce an interface between components owned and maintained separately. They allow service A to test and release without running service B and still be relatively confident that they didn’t break the interface between the two. In the case of a monolith, both sections would be running anyway and it’s often the case that even if there are ownership boundaries, there’s much more of a culture of teams modifying each other’s code. As a result, contract tests largely don’t make sense for anything inside a monolith. If you have a monolith and a few external services, they may still make sense for those boundaries. In practice, however, we found that these are often difficult to set up and can be awkward or require a lot of overhead. Unless you have a really good use-case, they are likely not worth it.
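
To make the idea concrete, here is a deliberately simplified sketch of a contract test in Python. The contract format, `USER_CONTRACT`, `USER_EXAMPLE` and both service functions are assumptions made up for illustration; real setups usually rely on a dedicated contract-testing tool rather than a hand-rolled check like this.

```python
# Shared contract (hypothetical): the only artifact both services depend on.
USER_CONTRACT = {"id": int, "name": str, "active": bool}
USER_EXAMPLE = {"id": 1, "name": "Ada", "active": False}


def satisfies_contract(payload, contract):
    """True if payload has exactly the contract's fields with matching types."""
    return payload.keys() == contract.keys() and all(
        isinstance(payload[field], typ) for field, typ in contract.items()
    )


# Provider side (service B): checks that what it emits matches the contract.
def build_user_response(user_id):
    return {"id": user_id, "name": "Ada", "active": True}


def test_provider_honours_contract():
    assert satisfies_contract(build_user_response(1), USER_CONTRACT)


# Consumer side (service A): checks that it can handle a contract-shaped payload.
def display_name(payload):
    if payload["active"]:
        return payload["name"]
    return f"{payload['name']} (inactive)"


def test_consumer_handles_contract_payload():
    assert satisfies_contract(USER_EXAMPLE, USER_CONTRACT)
    assert display_name(USER_EXAMPLE) == "Ada (inactive)"
```

Neither test starts the other service. Each side only proves that it agrees with the shared definition, which is also why this is less rigorous than calling through the real integration.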

Both integration and component tests still make sense with a monolith. For integration tests, the hard part is determining how much these tests should include and where to draw the lines. As with microservices, the key is to test a single integration wherever possible, but the question becomes what you consider to be integration points. If your monolith has been kept fairly modular, this might not be difficult. Even if the code shares a codebase, if it has some semblance of divisions, you already have natural integration points. If, however, everything is entangled, this can be very difficult. The main thing is to think about which interactions you worry can’t be tested well by unit tests.

Component tests can actually really shine in a monolith that has gone through the evolution I described. If you have a lot of existing end to end (or nearly end to end) tests and find yourself in a place where you really need to speed up your test runs, a good strategy can be to find ways to mock or replace the database and any other slower components with faster alternatives for the tests. It’s often the case that you can replace those components for all of the tests with a single sweeping change. While this still takes some work, it can be a good way to speed tests up without completely rewriting everything, while keeping most of the coverage. In practice, component tests can have their own host of problems, such as an in-memory database having bugs that the main one wouldn’t. Given that, I wouldn’t necessarily write net new component tests, but they can be a good option for existing end to end tests.
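
As a sketch of what swapping in a faster database can look like, the example below runs an otherwise near end to end flow against an in-memory SQLite database from Python's standard library. `NoteRepository` and `handle_create_note` are hypothetical, and using SQLite as the stand-in is an assumption for illustration; it will not behave identically to a production database, which is exactly the caveat mentioned above.

```python
import sqlite3


class NoteRepository:
    """Hypothetical data-access class; here it is handed a sqlite3 connection."""

    def __init__(self, conn):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS notes "
            "(id INTEGER PRIMARY KEY, body TEXT NOT NULL)"
        )

    def add(self, body):
        cur = self.conn.execute("INSERT INTO notes (body) VALUES (?)", (body,))
        self.conn.commit()
        return cur.lastrowid

    def get(self, note_id):
        row = self.conn.execute(
            "SELECT body FROM notes WHERE id = ?", (note_id,)
        ).fetchone()
        return row[0] if row else None


def handle_create_note(repo, body):
    """Hypothetical request handler sitting on top of the repository."""
    if not body.strip():
        return {"status": 400}
    return {"status": 201, "id": repo.add(body)}


def test_create_then_read_note():
    # ":memory:" keeps the whole database in RAM, so the test is fast and
    # needs no shared infrastructure.
    conn = sqlite3.connect(":memory:")
    try:
        repo = NoteRepository(conn)
        response = handle_create_note(repo, "buy milk")
        assert response["status"] == 201
        assert repo.get(response["id"]) == "buy milk"
        assert handle_create_note(repo, "   ")["status"] == 400
    finally:
        conn.close()
```

Because the connection is passed in, the same handler and repository code can be pointed at a different database by changing only the test setup.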

A monolith can feel impossible to add good testing to, especially when, as is common, testing was not emphasized in the early days. That said, the easiest way to correct the situation is to make sure everyone understands the various types of tests and their importance, and that all tests are made as easy to write as possible. Prioritizing adding more tests or converting tests can be challenging, but if everyone has a common goal in mind, it becomes easy to slowly improve things over time with every commit.

Variety is Important

Whether you’re working with microservices or in a monolith, each of these test types has its place. If a unit test breaks, it’s very easy to isolate what went wrong. Additionally, you can run them locally with no extra setup and no internet connection (useful if you’re working on the train). They typically run very quickly and make it easy to write many tests. If they break, it’s almost always because something is actually broken (flaky tests typically happen when more things are tested together).

It’s easy, using mocks, to test error cases that you don’t expect to actually happen, and may not even be possible to test in an end to end test — such as handling a timeout from another service or a database error. Finally, it’s much easier to separate the tests so that you’re only running tests relevant to the change. For example, there’s no point in running tests for file A, much less service X if all of my changes are in file B of service Y.
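
For example, a timeout from another service can be simulated with a mock that raises on call. This is a minimal sketch: `fetch_profile`, the `client` object and the fallback payload are hypothetical, chosen only to show the technique.

```python
from unittest.mock import Mock


def fetch_profile(client, user_id):
    """Hypothetical caller that degrades gracefully when the remote call times out."""
    try:
        return client.get_profile(user_id)
    except TimeoutError:
        return {"id": user_id, "name": None, "stale": True}


def test_timeout_returns_fallback():
    client = Mock()
    # side_effect makes the mocked call raise instead of returning a value.
    client.get_profile.side_effect = TimeoutError("simulated timeout")
    assert fetch_profile(client, 5) == {"id": 5, "name": None, "stale": True}
    client.get_profile.assert_called_once_with(5)
```

Reproducing the same failure in an end to end test would mean making a real service hang on demand, which is rarely practical.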

On the flip side, it’s also easy for two different teams (or even two people on the same team) to make different assumptions about how something should work that won’t be caught even with 100% unit test coverage. It may not even be a miscommunication about an integration but a misunderstanding or incorrect assumption about how a database or cache works. The best way to test if an integration is working is by running a test through that integration.

To take that even further, the best way to ensure that a key use-case is taking the expected code path and every part of the integration and configuration works is to run a full end to end test. If there are key use-cases that absolutely cannot break, end to end tests can offer an additional level of confidence. They can even find unhandled errors that were completely unexpected.

Sometimes I find integration or even end to end tests useful in fixing bugs. If I can reproduce the bug whose behavior has been described to me in a test, I can then trace through to figure out what’s going wrong. Then I can fix that bug and verify that the test now passes. If it is an end to end test for an edge-case, it may not be worth keeping and checking in, but even if I delete it, it has given me confidence that the problem has been corrected.

Higher level tests are also useful for ensuring that refactoring doesn’t break anything. Because the tests are completely removed from the implementation, as long as I keep the top level functionality the same, I can delete an entire class. Something like that would break unit tests, but that shouldn’t affect end to end tests.

Clearly, no single test type is perfect. The most important thing is to make sure you’re using a variety of test types and using the correct type for the scenario you’re testing. One mistake I saw a company make was making integration tests easier to write and emphasizing them more than unit tests. As a result, developers didn’t take the time to think through which type of test actually made the most sense and therefore wrote almost entirely integration tests. Because we were transitioning from end to end tests at the time, they felt like unit tests to us (after all, they were much smaller in scope than the end to end tests). In this particular case, they were including the integration with the database. This caused two problems. First, because of our development environment setup, these were virtually impossible to run locally, which made things like developing while riding the train difficult. It also put a lot of load on our shared resources. Second, the actual setup of the data in MySQL was slow, so these tests were all extremely slow, especially when we had lots of them. While I think it’s important to make integration tests easy to write, it’s even more important to make sure that all test types are easy to write and that everyone understands how and when to write each.

Ultimately, you want a balance of different test types to ensure that you end up with testing that is robust, fast and has few false breaks. Finding the best balance is the tricky part and requires thinking through the trade-offs of the different test types and thinking about those in the context of the particular thing you’re trying to test. To make things even harder, the best balance for your company may not be the same as the best balance for someone else. How sensitive are you to cost? How important is it that nothing breaks?

There is no ‘right’ or ‘wrong’ type of test, only a right or wrong test for a given scenario. While it would be nice if there were a clear rule of thumb about when to write what, it’s never that easy. Educating your developers on the trade-offs of different test types and how to write each is the best way to gain robustness.