Getting Started with ETL Pipelines

Extract, Transform, Load

Today, data is constantly generated and stored in many different sources, such as databases, APIs, streaming platforms, spreadsheets, and files. A data pipeline combines data from these sources into a single, consistent format to support analysis and other business needs. In short, a data pipeline is a set of processes and technologies that describes data's journey from its source to its final destination.
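As a rough illustration of "combining data from multiple sources into one consistent format", the sketch below takes the same kind of record from two hypothetical sources (a CSV export and a JSON API payload) that use different field names, and maps both onto one shared schema. The field names, the sample data, and the helpers `from_csv`, `from_json`, and `combine` are all made up for this example; it is a minimal sketch using only the Python standard library, not a production pipeline.

```python
import csv
import io
import json

# Hypothetical inputs: a CSV export and a JSON API payload describing customers,
# each with its own field names.
CSV_SOURCE = "customer_id,full_name,signup\n1,Ada Lovelace,2023-01-05\n2,Alan Turing,2023-02-11\n"
JSON_SOURCE = '[{"id": "3", "name": "Grace Hopper", "signup_date": "2023-03-20"}]'


def from_csv(text):
    """Map CSV rows onto the unified schema {id: int, name: str, signup_date: str}."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"id": int(row["customer_id"]), "name": row["full_name"], "signup_date": row["signup"]}
        for row in reader
    ]


def from_json(text):
    """Map JSON records onto the same unified schema (the JSON id arrives as a string)."""
    return [
        {"id": int(rec["id"]), "name": rec["name"], "signup_date": rec["signup_date"]}
        for rec in json.loads(text)
    ]


def combine(*sources):
    """Concatenate already-normalized records from several sources, ordered by id."""
    combined = [record for source in sources for record in source]
    return sorted(combined, key=lambda r: r["id"])


if __name__ == "__main__":
    unified = combine(from_csv(CSV_SOURCE), from_json(JSON_SOURCE))
    for record in unified:
        print(record)
```

The key design choice is to normalize each source into the shared schema at its own boundary (`from_csv`, `from_json`), so that everything downstream only ever sees one record shape.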

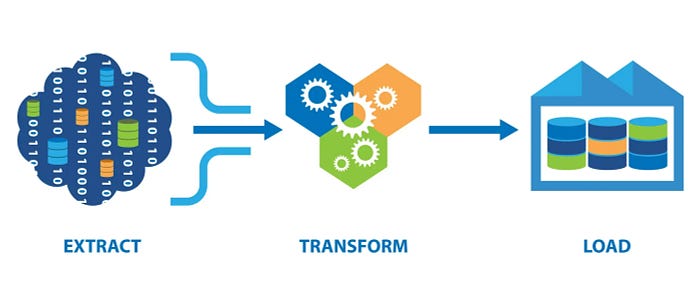

ETL stands for Extract, Transform, and Load.

- The extract (E) step pulls data from various sources, systems, or applications, such as files, APIs, databases, cloud systems, and social media platforms.

- The transform (T) step cleans, enriches, aggregates, and standardizes the extracted data. Cleaning removes errors, duplicates, and irrelevant information, and the result is converted into a format that is useful for analysis.

- The load (L) step is the last one in the ETL process: the transformed data is written into a target system such as a database, a data warehouse, a data lake, or a data mart.
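The three steps can be sketched end to end in a few lines of Python. This is a minimal example under stated assumptions: the raw CSV text stands in for an extracted file, the functions `extract`, `transform`, and `load` and the table `sales_by_country` are hypothetical names, and an in-memory SQLite database (from the standard `sqlite3` module) stands in for a real target database or warehouse. The transform step shows cleaning (dropping missing values and duplicate orders), standardizing (normalizing country codes), and aggregating (totals per country).

```python
import csv
import io
import sqlite3

# Hypothetical raw export: note the duplicate order, the missing amount,
# and inconsistent casing/whitespace in the country column.
RAW_CSV = """order_id,country,amount
1001, us ,19.99
1002,DE,5.00
1002,DE,5.00
1003,us,
1004,Us,10.01
"""


def extract(text):
    """E: read raw rows from a CSV source as a list of dicts (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """T: clean, deduplicate, standardize, and aggregate the raw rows."""
    seen = set()
    totals = {}
    for row in rows:
        amount = row["amount"].strip()
        if not amount:                      # cleaning: drop rows with a missing amount
            continue
        if row["order_id"] in seen:         # cleaning: drop duplicate orders
            continue
        seen.add(row["order_id"])
        country = row["country"].strip().upper()  # standardizing: " us " -> "US"
        totals[country] = totals.get(country, 0.0) + float(amount)  # aggregating
    # Round to cents so float noise does not leak into the target table
    return sorted((country, round(total, 2)) for country, total in totals.items())


def load(records, conn):
    """L: write the transformed records into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_country (country TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO sales_by_country VALUES (?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real database or warehouse
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM sales_by_country ORDER BY country").fetchall())
    # prints [('DE', 5.0), ('US', 30.0)]
```

Keeping each step as a separate function mirrors the E, T, and L stages described above: any one of them can be swapped (for example, reading from an API instead of a CSV, or loading into a different database) without touching the other two.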

Hence, an ETL pipeline is an ordered set of processes that extract data from one or more input sources, transform the data as required, and load it…